Collecting SNA data from “Dispatch”

For our social network analysis (SNA) we can reuse the script that collected toponymic data from articles, but the lines of code that collected toponymic data from a given article should be replaced with lines that collect network data:

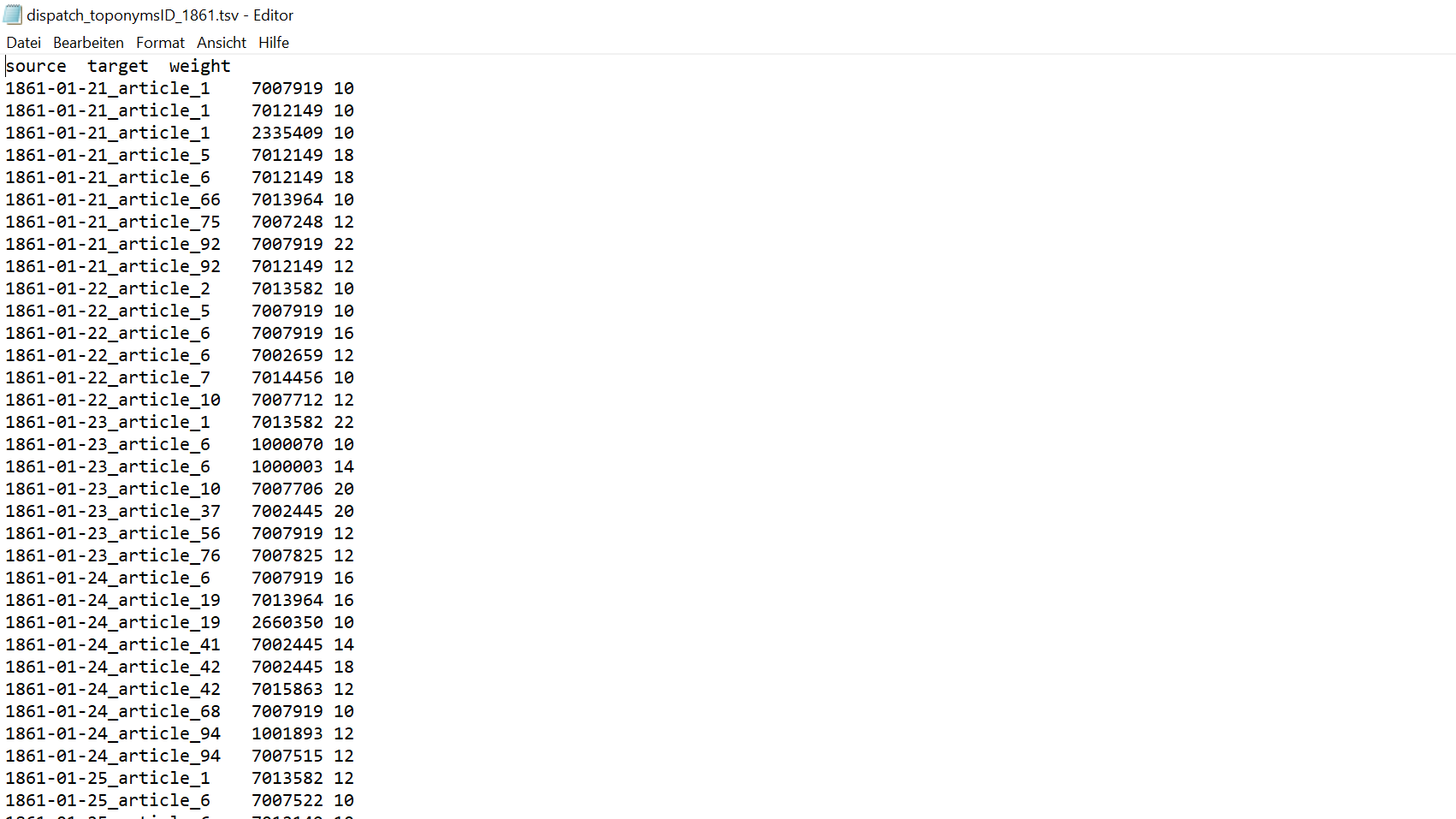

1. Collect all relevant items and remove duplicates.
2. Convert them into edges.
3. Once all the edges are collected, count their frequencies.
4. The result should be a table (or a csv/tsv file) with three columns: source, target, weight.
5. The weight value can be calculated in a number of different ways.
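As a minimal sketch of steps 1 to 4, the snippet below builds co-occurrence edges between toponyms that appear in the same article and counts how often each pair occurs. The `articles` dictionary and its TGN numbers are made-up placeholders, and co-occurrence counting is only one of the possible ways to define the weight.

```python
import csv
import io
from collections import Counter
from itertools import combinations

# Hypothetical sample data: article ID -> TGN numbers found in that article
articles = {
    "1861-01-01_article_1": ["1000001", "1000002", "1000001"],
    "1861-01-01_article_2": ["1000001", "1000002", "1000003"],
}

edge_counts = Counter()
for items in articles.values():
    unique_items = sorted(set(items))  # step 1: remove duplicates
    for source, target in combinations(unique_items, 2):  # step 2: pairs become edges
        edge_counts[(source, target)] += 1  # step 3: count edge frequencies

# step 4: a table with the three columns source, target, weight
out = io.StringIO()
writer = csv.writer(out, delimiter="\t", lineterminator="\n")
writer.writerow(["source", "target", "weight"])
for (source, target), weight in sorted(edge_counts.items()):
    writer.writerow([source, target, weight])

print(out.getvalue())
```

Sorting the items before pairing them keeps each undirected edge in one orientation, so the same pair is never counted under two different keys.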

Script 1:

import re, os, csv

source = "C:/Users/Tatjana Smiljnic/Desktop/univie-tnt-2019.github.io/Lesson_10/tgn_xml_0619/" # xml files
oldPath = "C:/Users/Tatjana Smiljnic/Desktop/univie-tnt-2019.github.io/Lesson07/wget-activehistory/" # first dispatch
newPath = "C:/Users/Tatjana Smiljnic/Desktop/univie-tnt-2019.github.io/Lesson_13/" # new path to save the files

lof = os.listdir(oldPath) # making a list of files from the dispatch xmls

def generate(year): # function where year is our input filter (e.g. "1861")
    dataList = {}
    for f in lof: # looping through our XML files
        if f.startswith("dltext"): # fileName test
            with open(oldPath + f, "r", encoding="utf8") as f1:
                text = f1.read() # reading the content of the file
            text = text.replace("&amp;", "&") # unescaping ampersands in the content of the file
            # find the date:
            date = re.search(r'<date value="([\d-]+)"', text).group(1)
            if date.startswith(year): # making sure the output only includes data for the input year
                c = 0
                allarticles = re.findall(r'(?s)<div3 type="article"(.*?)</div3>', text)
                for article in allarticles:
                    c += 1
                    # creating an ID:
                    ID = date + "_article_" + str(c)
                    for tg in re.findall(r"(tgn,\d+)", article): # finding the tgn information
                        tgn = tg.split(",")[1] # reducing the tgn info to the tgn-number
                        key = ID + '##' + tgn # creating a key from ID and tgn to count the frequency (weight) of the occurrence
                        # filling the dict: if the key already exists, count up the frequency, otherwise start at 1:
                        if key in dataList:
                            dataList[key]['weight'] += 1
                        else:
                            dataList[key] = {
                                'source': ID,
                                'target': tgn,
                                'weight': 1
                            }
    # saving the data in tsv format:
    tsv_columns = ['source', 'target', 'weight']
    with open("dispatch_toponymsID_%s.tsv" % year, "w", newline='', encoding="utf8") as f9:
        writer = csv.DictWriter(f9, delimiter='\t', fieldnames=tsv_columns)
        writer.writeheader()
        for data in dataList:
            if dataList[data]['weight'] >= 10: # only keep edges with a frequency of 10 or higher
                writer.writerow(dataList[data])

# the function can be used for different years; here we choose 1861:
generate("1861")
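To illustrate how Script 1 turns one article into weighted edges, here is a small sketch that runs the same `tgn,\d+` pattern over a made-up fragment. The `placeName` markup, the place labels and the TGN numbers are assumptions for illustration only; the only thing taken from the script is that each reference contains `tgn,` followed by digits.

```python
import re
from collections import Counter

# Hypothetical article fragment; the real Dispatch markup may look different
sample_article = (
    '<p>News from <placeName key="tgn,1000001">Place A</placeName> and '
    '<placeName key="tgn,1000002">Place B</placeName>; troops left '
    '<placeName key="tgn,1000001">Place A</placeName> today.</p>'
)
article_id = "1861-01-01_article_1"  # same ID pattern as in Script 1

# Reduce every "tgn,<digits>" match to the bare TGN number
tgn_numbers = [m.split(",")[1] for m in re.findall(r"tgn,\d+", sample_article)]

# Each (article, toponym) pair is one edge; its weight is the number of mentions
weights = Counter((article_id, tgn) for tgn in tgn_numbers)

for (source, target), weight in weights.items():
    print(source, target, weight, sep="\t")
```

Because the weight is counted per article and toponym, the threshold of 10 in Script 1 keeps only toponyms that are mentioned at least ten times within a single article.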

Script 2:

import re, os, csv

# loading the gazetteer data into a dictionary (with the following function):
def loadTGN(tgnTSV):
    with open(tgnTSV, "r", encoding="utf8") as f1:
        data = f1.read().split("\n")
    dic = {} # making the dict we will use
    for d in data: # looping through every line
        d = d.split("\t") # splitting the data at TABs
        dic[d[0]] = d # filling the dict: the tgn-number is the key, the whole row is the value
    return dic

# matching the tgn-numbers in our edges file with the gazetteer data (with the following function):
def match(freqFile, dicToMatch):
    with open(freqFile, "r", encoding="utf8") as f1:
        data = f1.read().split("\n")
    # creating all the lists we need:
    dataNew = []
    dataNewNA = []
    for d in data[1:]: # looping through our edges file (skipping the header)
        try:
            tgnID = d.split("\t")[1] # taking the tgn-number so we can compare it to the one in the dict
            if tgnID in dicToMatch: # matching places to the dict by tgn-number
                val = "\t".join(dicToMatch[tgnID]) # variable for the entry in the dict
                if "\tNA\t" in val: # separating toponyms with and without coordinates
                    dataNew.append(val) # places without coordinates are kept here as well, in case Gephi does not like missing nodes
                    dataNewNA.append(val)
                else:
                    dataNew.append(val)
            else: # this else is missing in the flattened original and is assumed from the message below
                print("%s not in Gaz!" % (tgnID)) # reporting every tgnID that could not be found in the gazetteer
        except IndexError:
            print("invalid content: " + d) # e.g. an empty line without a second column
    header = "ID\tLabel\tlat\tlon\n"
    # creating a file with all the matched toponyms (with or without coordinates):
    with open("nodes_" + freqFile, "w", encoding="utf8") as f9a:
        f9a.write(header + "\n".join(dataNew))
    # creating a file with only the toponyms without coordinates:
    with open("nodes_onlyNA_" + freqFile, "w", encoding="utf8") as f9b:
        f9b.write(header + "\n".join(dataNewNA))

dictionary = loadTGN("tgn_data_light.tsv") # variable for the Getty gazetteer we cleaned up

# running the matching on our edges file, using the dict built from "tgn_data_light.tsv":
match("dispatch_toponymsID_1861.tsv", dictionary)
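Before importing both tables into a network tool, it can help to check that every edge target also has a row in the nodes table. The sketch below is one possible way to do that with the `csv` module. The helper `missing_targets` and the in-memory sample tables are hypothetical; only the column names `source`, `target`, `weight` and `ID`, `Label`, `lat`, `lon` are taken from the two scripts.

```python
import csv
import io

# Hypothetical sample tables using the column layout of the two scripts
edges_tsv = (
    "source\ttarget\tweight\n"
    "1861-01-01_article_1\t1000001\t12\n"
    "1861-01-02_article_3\t1000002\t10\n"
)
nodes_tsv = (
    "ID\tLabel\tlat\tlon\n"
    "1000001\tPlace A\t37.5\t-77.4\n"
)

def missing_targets(edges_file, nodes_file):
    """Return the edge targets that have no matching row in the nodes table."""
    node_ids = {row["ID"] for row in csv.DictReader(nodes_file, delimiter="\t")}
    targets = {row["target"] for row in csv.DictReader(edges_file, delimiter="\t")}
    return sorted(targets - node_ids)

print(missing_targets(io.StringIO(edges_tsv), io.StringIO(nodes_tsv)))
```

With real files, the two `io.StringIO` objects would be replaced by file handles opened with `encoding="utf8"`.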

Results